The Ultimate Guide to Successful A/B Testing: Examples and Tips

A/B testing compares two versions of a webpage, email, or marketing asset to find out which one performs better. It helps you make data-driven decisions by showing different versions to separate audience segments. This guide explains the basics, importance, and steps to conduct A/B testing effectively.

Key Takeaways

- A/B testing compares two versions of a marketing asset to evaluate performance and improve user engagement through data-driven decisions.
- Establishing clear goals and hypotheses is critical for successful A/B testing, because they guide the structure and focus of each test toward meaningful results.
- Integrating A/B testing into your marketing strategy allows for continual optimization and adaptation to changing customer preferences, improving overall marketing effectiveness.

What is A/B Testing?

A/B testing, commonly referred to as split testing, compares two versions of a webpage, email, or other marketing asset to determine which one performs better. You split your audience at random, show each group a different version, and measure performance on key metrics such as conversion rate and engagement time. This helps businesses identify the most effective ways to engage their audience and drive desired actions.
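To split an audience so each person always sees the same version, many teams hash a stable user ID into a bucket. The sketch below is a minimal illustration of that idea, not any particular tool's method; the function name `assign_variant`, the experiment name, and the user IDs are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: tuple = ("A", "B")) -> str:
    """Deterministically map a user to one variant of one experiment (hypothetical helper)."""
    # Hash the experiment name together with the user ID, so the same user
    # can fall into different groups in different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the first 8 hex characters as an integer and map it to a bucket.
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]


# The same user always gets the same answer for the same experiment.
print(assign_variant("user-42", "homepage-headline"))
```

Hashing instead of flipping a coin on every page view keeps a returning visitor in one group, so their behavior is attributed to a single version.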

The beauty of A/B testing lies in its flexibility. You can test anything from simple changes like headlines and buttons to complete page redesigns. The goal is to provide the best customer experience by making data-driven decisions. Careful analysis of test results enables informed changes that enhance user engagement and satisfaction.

The Importance of A/B Testing

Why is A/B testing crucial for your marketing strategy? It lets you validate or refute assumptions about consumer behavior with data, which significantly reduces the risk of marketing failures. Brands that run A/B tests regularly can stay competitive by continually optimizing their strategies, which can lead to more revenue and a better customer experience.

Moreover, A/B testing provides a systematic approach to improving marketing efforts. For instance, a successful email marketing campaign often relies on data-driven insights to optimize subject lines, content, and send times. Setting clear objectives and focusing on the areas that most need improvement can drive significant gains in marketing performance and help you achieve your business goals.

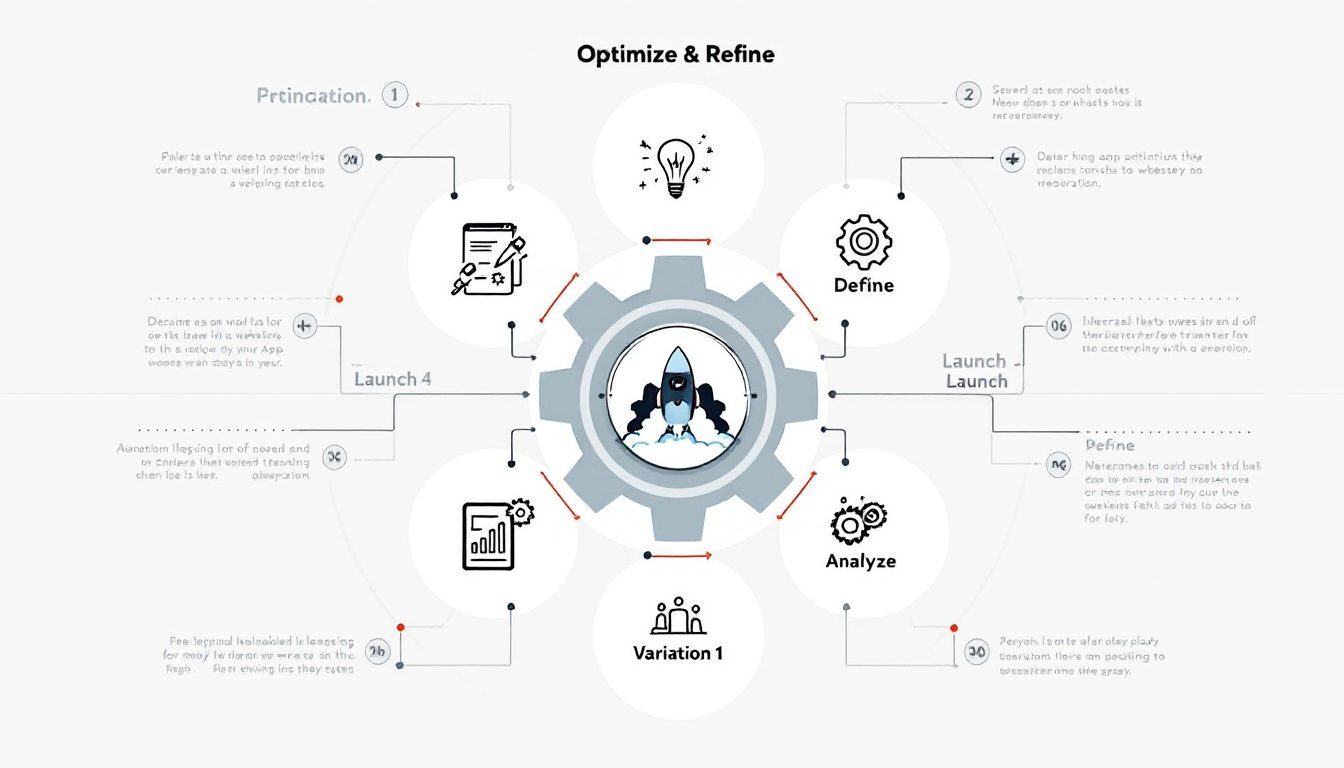

How to Conduct A/B Testing

Several key steps are crucial for obtaining reliable and actionable insights from A/B testing: defining your goals and hypotheses, creating variations, and running the test.

These steps ensure that your A/B testing is structured, focused, and yields meaningful results.

Define Your Goals and Hypotheses

Setting clear goals is the foundation of any successful A/B test. Before you start, identify what you aim to achieve and establish quantifiable objectives. For example, you might aim to increase click-through rates on a landing page or boost the open rates of an email campaign. These clear goals will help you formulate a test hypothesis, guiding your evaluation and measurement of success.

Once you have your goals, develop hypotheses that specify the expected outcomes of your changes. For instance, if your goal is to increase conversions, your hypothesis might be that a more prominent call-to-action button will lead to higher click-through rates. These hypotheses will direct your testing efforts and help you determine the most effective strategies to achieve your business goals.

Create Variations

Creating variations is a critical step in A/B testing. Focus on changing one element at a time to isolate its impact on performance. Whether it’s a different headline, a new image, or a redesigned button, each variation should be tested against the original version to measure its effectiveness accurately.

This approach ensures that you can pinpoint which specific change drives the desired action.
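One way to enforce the one-change rule is to describe each version as a set of element values and check how many differ from the control. This is a minimal sketch under that assumption; the element names (`headline`, `button_color`, `hero_image`) and their values are hypothetical.

```python
def changed_elements(control: dict, variant: dict) -> set:
    """Return the names of page elements whose values differ between two versions."""
    keys = control.keys() | variant.keys()
    return {k for k in keys if control.get(k) != variant.get(k)}


def is_single_change(control: dict, variant: dict) -> bool:
    """True when the variant changes exactly one element of the control."""
    return len(changed_elements(control, variant)) == 1


# Hypothetical page definitions.
control = {"headline": "Start your free trial", "button_color": "blue", "hero_image": "team.jpg"}
variant_b = {**control, "button_color": "orange"}  # one change: a clean A/B test
variant_c = {**control, "headline": "Try it free for 30 days", "button_color": "orange"}  # two changes

print(is_single_change(control, variant_b), is_single_change(control, variant_c))
```

If a variant fails the check, any difference in results cannot be traced back to a single element.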

Run the Test

To obtain reliable results, split your audience randomly and run the A/B test for a sufficient duration, typically at least two weeks. This period lets enough visitors interact with each variant and covers weekly patterns in behavior. Duration alone does not make results statistically significant, though: that depends on how many visitors each variant receives and how large the difference between them is, so estimate the sample size you need before you launch and keep the test running until you reach it.
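A common way to plan the required sample is the standard two-proportion sample-size formula, which needs a baseline conversion rate, the smallest improvement you care about, a significance level, and a statistical power. The sketch below assumes a 5% significance level and 80% power as defaults and rounds the run time up to whole weeks; the function names and example rates are illustrative, not prescribed by any tool.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_baseline: float, p_expected: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH variant to detect p_baseline -> p_expected (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_baseline + p_expected) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p_baseline * (1 - p_baseline)
                                      + p_expected * (1 - p_expected))) ** 2
    return math.ceil(numerator / (p_expected - p_baseline) ** 2)


def days_needed(n_per_variant: int, daily_visitors: int, n_variants: int = 2) -> int:
    """Days to collect the sample, rounded up to whole weeks to cover every weekday."""
    days = math.ceil(n_per_variant * n_variants / daily_visitors)
    return math.ceil(days / 7) * 7


# Hypothetical: 5% baseline conversion, hoping to detect a lift to 6%, 1,000 visitors/day.
n = sample_size_per_variant(0.05, 0.06)
print(n, days_needed(n, 1_000))
```

Smaller expected differences require much larger samples, which is why low-traffic pages often need tests that run well beyond two weeks.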

Throughout the testing phase, track key metrics aligned with your objectives. Measure how each variant performs in terms of the desired actions, such as clicks, sign-ups, or purchases. This data-driven approach allows you to determine which version is more effective and make informed decisions based on the test results.
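Tracking a conversion metric per variant boils down to counting exposed visitors and the visitors who took the desired action. The sketch below assumes a hypothetical event log with one `(user_id, variant, converted)` record per exposed visitor; real analytics exports will look different.

```python
from collections import defaultdict


def summarize(events):
    """Count visitors, conversions, and conversion rate per variant.

    events: iterable of (user_id, variant, converted) tuples, one per exposed visitor.
    """
    visitors = defaultdict(int)
    conversions = defaultdict(int)
    for _user_id, variant, converted in events:
        visitors[variant] += 1
        if converted:
            conversions[variant] += 1
    return {
        v: {"visitors": visitors[v], "conversions": conversions[v],
            "rate": conversions[v] / visitors[v]}
        for v in visitors
    }


# Hypothetical log of four visitors.
log = [("u1", "A", True), ("u2", "A", False), ("u3", "B", True), ("u4", "B", True)]
print(summarize(log))
```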

Analyzing A/B Test Results

Once the test is complete, analyzing the results is essential to draw meaningful conclusions. Key metrics to evaluate include conversion rates, which indicate how many users took the desired action after exposure to the test variants. Tracking metrics aligned with your specific business goals ensures that your analysis is relevant and actionable.

Statistical significance is crucial in A/B testing. It indicates that the observed difference between test variants is unlikely to be explained by random chance alone. Even if an A/B test does not produce a clear winner, the insights gained can still refine your marketing strategies and improve the user experience.
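For a yes/no metric such as conversion, one standard way to check significance is a two-proportion z-test. The sketch below implements that test with Python's standard library; the visitor and conversion counts are hypothetical, and commercial testing tools may use other methods (for example, Bayesian or sequential approaches).

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test comparing conversion rates of variants A and B."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 500/10,000 conversions for A versus 600/10,000 for B.
z, p = two_proportion_z_test(500, 10_000, 600, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below the significance level chosen before the test (commonly 0.05) suggests the difference is not just noise.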

Successful examples like Groove and Highrise demonstrate how effective A/B testing can lead to significant improvements in conversion rates and user engagement.

Examples of Successful A/B Tests

Real-world examples highlight the power of A/B testing in optimizing marketing strategies and improving user engagement.

By examining these success stories, you can gain valuable insights into how A/B testing can transform your marketing efforts.

Homepage Design

A notable example involves a homepage A/B test in which adding an image of a dog significantly increased user engagement. Visitors who saw the dog consumed three times as much content as those who did not, illustrating how a simple change can improve the user experience.

Email Subject Lines

In the realm of email marketing, tweaking subject lines can have a profound impact. Campaign Monitor’s A/B test on personalized subject lines resulted in a 26% increase in open rates. This example underscores the importance of personalization in successful email marketing campaigns. Testing different subject lines while keeping the rest of the email content constant helps marketers identify the most effective approaches to engage their audience.
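A reported "26% increase" is usually a relative lift, measured against the control's rate, which is different from the absolute change in percentage points. The sketch below shows both calculations; the 20% baseline open rate is a hypothetical number chosen for illustration, not Campaign Monitor's actual figure.

```python
def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Change relative to the control, e.g. 0.26 means a 26% increase."""
    return (variant_rate - control_rate) / control_rate


def absolute_lift(control_rate: float, variant_rate: float) -> float:
    """Change in percentage points, expressed as a fraction."""
    return variant_rate - control_rate


# Hypothetical: a 20% open rate rising to 25.2% is a 26% relative lift,
# but only 5.2 percentage points in absolute terms.
print(relative_lift(0.20, 0.252), absolute_lift(0.20, 0.252))
```

Reporting both figures helps readers judge whether a large-sounding relative lift matters at their own baseline.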

Using power words in subject lines is another effective strategy. These words can significantly influence open rates, making your email stand out in a crowded inbox. Such tweaks, supported by A/B testing, can drive better engagement and higher conversion rates in email marketing campaigns.

Using Analytics in A/B Testing

Analytics play a pivotal role in A/B testing, providing the data needed to measure key metrics like open rates, click-through rates, and conversion rates. Tools like Google Analytics can help track these metrics, offering insights into user behavior and test performance. Segmenting visitors, such as new versus returning, provides a deeper understanding of how different groups respond to your variations.
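Segmenting results means computing the same metric separately for each combination of segment and variant. This sketch assumes hypothetical rows of `(segment, variant, converted)`; it is not tied to Google Analytics or any other tool's export format.

```python
from collections import defaultdict


def rates_by_segment(rows):
    """Conversion rate for each (segment, variant) pair.

    rows: iterable of (segment, variant, converted) tuples, one per visitor.
    """
    totals = defaultdict(lambda: [0, 0])  # (segment, variant) -> [visitors, conversions]
    for segment, variant, converted in rows:
        cell = totals[(segment, variant)]
        cell[0] += 1
        cell[1] += int(converted)
    return {key: conv / visits for key, (visits, conv) in totals.items()}


# Hypothetical visitors split into new and returning segments.
rows = [
    ("new", "A", True), ("new", "A", False),
    ("returning", "A", True),
    ("new", "B", False),
    ("returning", "B", True), ("returning", "B", False),
]
print(rates_by_segment(rows))
```

Keep in mind that each segment has fewer visitors than the whole test, so segment-level differences need their own significance check.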

Monitoring retention rates can also reveal which A/B test variants encourage users to return and engage with your website. These insights enable you to make data-driven decisions, optimizing your marketing strategies for better results and higher customer satisfaction.
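Retention can be defined in several ways; this sketch assumes one simple definition, the share of users who come back within a fixed number of days after their first visit, and compares it across variants. The 7-day window and the visit data are hypothetical.

```python
from datetime import date, timedelta


def retention_rate(visits: dict, window_days: int = 7) -> float:
    """Share of users with a return visit within window_days after their first visit.

    visits: dict mapping user_id -> list of visit dates.
    """
    if not visits:
        return 0.0
    retained = 0
    for dates in visits.values():
        first = min(dates)
        cutoff = first + timedelta(days=window_days)
        # A later visit on or before the cutoff counts as a return.
        if any(first < d <= cutoff for d in dates):
            retained += 1
    return retained / len(visits)


def retention_by_variant(visits_by_variant: dict, window_days: int = 7) -> dict:
    return {variant: retention_rate(v, window_days) for variant, v in visits_by_variant.items()}


# Hypothetical visit histories.
data = {
    "A": {"u1": [date(2024, 1, 1), date(2024, 1, 3)], "u2": [date(2024, 1, 1)]},
    "B": {"u3": [date(2024, 1, 1), date(2024, 1, 20)], "u4": [date(2024, 1, 2)]},
}
print(retention_by_variant(data))
```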

Common Pitfalls in A/B Testing

While A/B testing is a powerful tool, it’s important to be aware of common pitfalls. One frequent mistake is starting a test without a precise hypothesis, which makes it easy to focus on irrelevant metrics and draw conclusions that don’t hold up. Failing to iterate on hypotheses after each test can also prevent further optimization and a deeper understanding of user behavior.

Another common issue is not considering the customer journey, which can result in testing elements that don’t significantly affect conversion rates. Additionally, running tests without a sufficiently large user base or stopping tests too early can lead to inconclusive or inaccurate results. Properly documenting tests and avoiding multiple changes at once are also crucial to ensuring clear and actionable insights.
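Why is stopping early risky? If you check results repeatedly and stop as soon as a difference looks significant, you declare false winners more often than your significance level suggests. The simulation below is a sketch of that effect using A/A tests, where both groups share the same true conversion rate, so every "winner" is a false positive. The 5% rate, visitor counts, and check interval are assumptions chosen for illustration.

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided two-proportion z-test p-value."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    if p_pool in (0, 1):
        return 1.0
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(n_tests=300, n_per_arm=2000, check_every=200,
                      rate=0.05, alpha=0.05, seed=1):
    """Return (false-positive rate with one final check, rate when peeking)."""
    rng = random.Random(seed)
    fixed_hits = peeking_hits = 0
    for _ in range(n_tests):
        conv_a = conv_b = 0
        any_look_significant = False
        for i in range(1, n_per_arm + 1):
            # Both arms share the same true rate, so there is no real difference.
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if i % check_every == 0 and p_value(conv_a, i, conv_b, i) < alpha:
                any_look_significant = True
        peeking_hits += any_look_significant
        fixed_hits += p_value(conv_a, n_per_arm, conv_b, n_per_arm) < alpha
    return fixed_hits / n_tests, peeking_hits / n_tests


fixed, peeking = simulate_aa_tests()
print(f"single final check: {fixed:.1%} false winners; peeking: {peeking:.1%}")
```

Deciding the sample size in advance and evaluating once at the end keeps the false-positive rate close to the level you chose.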

When to Use Multivariate Testing

Multivariate testing differs from A/B testing by allowing multiple elements to be tested simultaneously, making it ideal for assessing the impact of major changes without a complete redesign. This method can save time by streamlining the testing process and providing more comprehensive insights into which elements most influence conversion rates.

However, multivariate testing requires a larger sample size, because traffic is divided among every combination of the elements being tested. Plan for that extra traffic before you start so your test results can reach statistical significance.
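The number of combinations in a full-factorial multivariate test is the product of the number of options for each element, so the required traffic grows quickly. This sketch enumerates the combinations with `itertools.product`; the elements and options are hypothetical, and it makes the simplifying assumption that every combination needs the same number of visitors as one variant in an A/B test.

```python
from itertools import product

# Hypothetical elements and the options being tested for each.
elements = {
    "headline": ["Start your free trial", "Try it free for 30 days"],
    "button_color": ["blue", "orange", "green"],
    "hero_image": ["team.jpg", "product.jpg"],
}


def combinations(elements: dict) -> list:
    """Every full-factorial combination of element options."""
    names = list(elements)
    return [dict(zip(names, values)) for values in product(*elements.values())]


def total_sample_needed(elements: dict, n_per_combination: int) -> int:
    """Total visitors if each combination needs n_per_combination visitors."""
    return len(combinations(elements)) * n_per_combination


# 2 headlines x 3 colors x 2 images = 12 combinations.
print(len(combinations(elements)), total_sample_needed(elements, 1_000))
```

An A/B test of one of these elements would split the same traffic between just two versions.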

Best Practices for A/B Testing

To get the best results from A/B testing, follow these best practices. Target specific audience segments to gain more meaningful insights, and run each test for a minimum of 1-2 full weeks so you gather sufficient data across every day of the week. Timing also matters: avoid testing during periods of strong seasonal variation, such as major holidays, which could skew your results.

Adhering to these practices enhances the effectiveness of your A/B testing efforts, leading to improved marketing strategies and better business outcomes.

Integrating A/B Testing into Your Marketing Strategy

Integrating A/B testing into your marketing strategy provides continuous insights and recommendations to enhance performance and customer experience. Regularly running tests allows you to adapt your strategies as customer preferences evolve, ensuring your marketing efforts remain relevant and effective.

A/B testing is particularly straightforward and effective in email marketing, where binary responses make it easier to measure outcomes. Tracking metrics like conversion rates and using dynamic content tailored to recipient preferences helps optimize email campaigns for higher engagement and conversion rates.

Summary

A/B testing is an invaluable tool for optimizing marketing strategies and improving user engagement. By setting clear goals, creating focused variations, and running well-structured tests, you can make data-driven decisions that enhance customer satisfaction and drive more revenue. Analyzing test results and learning from successful examples can further refine your approach, avoiding common pitfalls and employing best practices.

As you integrate A/B testing into your marketing strategy, continuous testing and adaptation will keep your efforts aligned with evolving customer preferences. Embrace A/B testing to unlock new opportunities for growth and success in your marketing endeavors.

Frequently Asked Questions

What is the main purpose of A/B testing?

The main purpose of A/B testing is to compare two versions of content to identify which one yields better performance and user engagement. This process helps optimize marketing strategies effectively.

How does A/B testing reduce the risk of marketing failures?

A/B testing effectively reduces the risk of marketing failures by allowing you to validate assumptions with real data, leading to informed decisions based on concrete test results. This approach minimizes guesswork and enhances the likelihood of success in your marketing strategies.

What are some key metrics to track in A/B testing?

To effectively evaluate A/B testing outcomes, focus on tracking conversion rates, open rates, click-through rates, and retention rates, as these metrics provide valuable insights into performance variations.

How long should an A/B test be run?

An A/B test should typically run for at least two weeks so the data covers weekly patterns in behavior. Duration alone does not guarantee statistical significance, however: keep the test running until each variant reaches the sample size you estimated before launch.

When should multivariate testing be used instead of A/B testing?

Multivariate testing is the preferred choice when you need to evaluate multiple variables at once, particularly for significant updates rather than a full redesign. However, it demands a larger sample size to yield reliable results.

Are you interested in finding out more? Browse the rest of our blog for other marketing tips. If you’re ready to create your first email, survey, sign-up form, or landing page then register for a free trial to get the tools you need to build powerful marketing campaigns!

© 2024, VerticalResponse. All rights reserved.